Integration testing is a critical part of software testing.

It ensures that individual units work as expected when combined. Unit tests alone are not enough, because they focus on individual parts rather than on how those parts interact with the other components in your system.

However, integration testing can be tricky. It requires extra effort from developers who need to figure out what needs to be tested and create test data for each component integrated into their application.

The top 5 integration testing best practices are:

- Don’t test every scenario with integration tests

- Separate unit and integration test suites

- Use a proper naming convention

- Run integration tests as part of the CI/CD process

- Make sure to reset test data between executions

This post will introduce you to 5 simple rules that will make your life easier when dealing with integration testing. Let’s get started!

What is integration testing?

Integration testing is a type of testing that checks how various software modules work together.

The goal is to verify that these individual components function together correctly as part of a larger system. Integration testing typically takes place after unit testing and before system and acceptance testing.

This approach makes it possible to identify integration errors early, before the latest code change is released to production. Integration tests also help find issues that unit tests may have missed.

What does integration testing involve?

The integration testing approach typically involves creating test objects that represent external dependencies (e.g., databases) and then exercising code paths that involve these dependencies.
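To make this concrete, here is a minimal sketch in Python using only the standard library. The post doesn’t prescribe a language, so the `UserRepository` class and the `users` table are hypothetical, and an in-memory SQLite database stands in for the real external dependency. The test writes through the repository and reads the data back, and it also hits a `UNIQUE` constraint that exists only in the database schema, something a unit test with a mocked repository would never see.

```python
import sqlite3
import unittest


class UserRepository:
    """Hypothetical data-access class: the code path under test."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def add(self, email: str) -> int:
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cur.lastrowid

    def find_by_email(self, email: str):
        # Returns an (id, email) tuple, or None if no row matches.
        return self.conn.execute(
            "SELECT id, email FROM users WHERE email = ?", (email,)
        ).fetchone()


class UserRepositoryIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # In-memory SQLite stands in for the external database dependency.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )
        self.repo = UserRepository(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_added_user_can_be_read_back(self) -> None:
        # Write through the repository, then read the row back from the database.
        user_id = self.repo.add("ada@example.com")
        self.assertEqual(
            self.repo.find_by_email("ada@example.com"), (user_id, "ada@example.com")
        )

    def test_duplicate_email_is_rejected_by_the_schema(self) -> None:
        self.repo.add("ada@example.com")
        # The UNIQUE constraint lives in the database, not in the Python code.
        with self.assertRaises(sqlite3.IntegrityError):
            self.repo.add("ada@example.com")
```

You can run it with `python -m unittest <module_name>`. Against a production-like database, you would replace the `sqlite3.connect` call with your real driver and connection settings.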

Over the years, integration testing has become an increasingly important part of the software development process. Integration tests are quite time-consuming to create and maintain, but they are essential to quality assurance (QA) for many software projects.

Integration tests can be considered an extension of unit tests that focuses on cross-cutting concerns such as data validation, security, and performance. These concerns are hard to cover in a unit test without introducing too much complexity into the codebase and significantly slowing down development.

By running integration tests, you can verify whether the system works as a whole, which unit tests alone cannot do.

Since integration tests cover a larger part of the application, they are usually slower. The slowness comes from the work integration tests have to do, such as:

- checking whether the database contains the correct data after an action is performed

- calling a network endpoint and waiting for a response

- interacting with a file system
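The file system case from the list above might look like the following sketch. `export_report` is a hypothetical feature that writes a CSV file; the test hands it a real temporary directory from `tempfile.TemporaryDirectory` and then reads the file back, so it exercises actual disk I/O rather than a mocked file object.

```python
import csv
import tempfile
import unittest
from pathlib import Path


def export_report(rows: list[tuple[str, int]], directory: Path) -> Path:
    """Hypothetical feature: write rows to report.csv inside `directory`."""
    path = directory / "report.csv"
    with path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["name", "count"])
        writer.writerows(rows)
    return path


class ExportReportIntegrationTest(unittest.TestCase):
    def test_report_is_written_to_disk(self) -> None:
        # A real, throwaway directory: the test touches the actual file system
        # and the directory is deleted when the with-block exits.
        with tempfile.TemporaryDirectory() as tmp:
            path = export_report([("alpha", 3), ("beta", 5)], Path(tmp))
            self.assertTrue(path.exists())
            with path.open(newline="") as f:
                self.assertEqual(
                    list(csv.reader(f)),
                    [["name", "count"], ["alpha", "3"], ["beta", "5"]],
                )
```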

What are the types of integration testing?

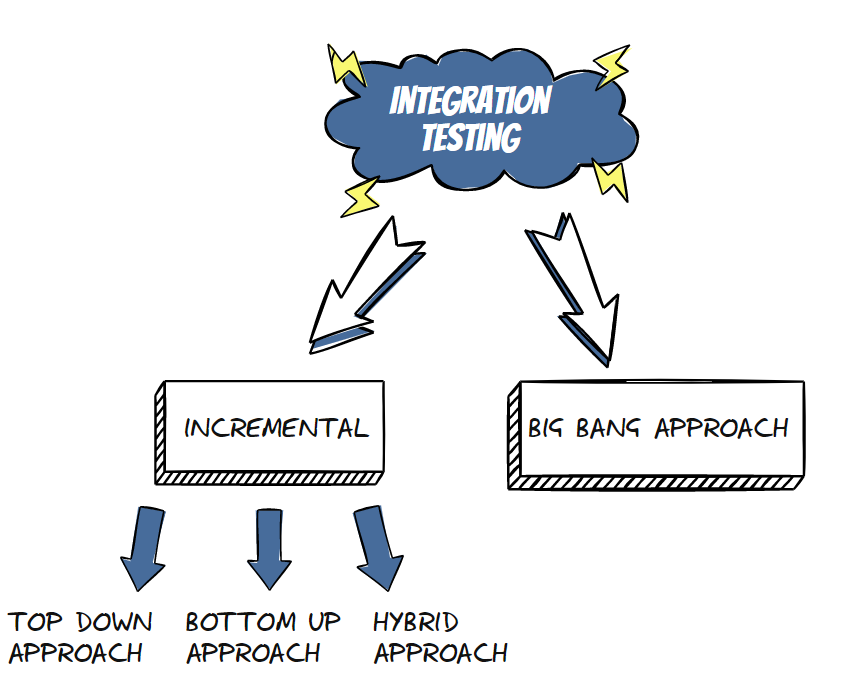

As with other kinds of testing, you can perform integration testing in different ways:

- Incremental integration testing – gradually combine units and test the interactions between them until you cover the whole codebase. You can perform it as top-down testing (starting from higher-level modules), bottom-up testing (starting from lower-level modules), or a hybrid approach.

- Big bang integration testing – all modules and components are integrated and tested as a whole.
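As an illustration of the incremental, top-down approach, here is a hypothetical Python sketch. `CheckoutService` is the higher-level module tested first, `InventoryModule` is a lower-level module that is already integrated, and `PaymentStub` temporarily replaces a payment module that isn’t ready yet. In the next increment, you would swap the stub for the real payment module and rerun the same tests.

```python
import unittest


class InventoryModule:
    """Hypothetical lower-level module that is already implemented."""

    def __init__(self) -> None:
        self.stock = {"book": 2}

    def reserve(self, item: str) -> bool:
        if self.stock.get(item, 0) > 0:
            self.stock[item] -= 1
            return True
        return False


class PaymentStub:
    """Stand-in for a lower-level payment module that is not integrated yet."""

    def charge(self, amount: float) -> bool:
        return True  # always succeeds, so the higher-level flow can be exercised


class CheckoutService:
    """Hypothetical higher-level module, integrated first in top-down order."""

    def __init__(self, inventory, payments) -> None:
        self.inventory = inventory
        self.payments = payments

    def checkout(self, item: str, price: float) -> str:
        if not self.inventory.reserve(item):
            return "out of stock"
        return "paid" if self.payments.charge(price) else "payment failed"


class TopDownStep1Test(unittest.TestCase):
    # Step 1: CheckoutService + real InventoryModule, payments still stubbed.
    def test_checkout_reserves_stock_then_charges(self) -> None:
        inventory = InventoryModule()
        service = CheckoutService(inventory, PaymentStub())
        self.assertEqual(service.checkout("book", 10.0), "paid")
        self.assertEqual(inventory.stock["book"], 1)

    def test_checkout_stops_when_out_of_stock(self) -> None:
        service = CheckoutService(InventoryModule(), PaymentStub())
        self.assertEqual(service.checkout("pen", 1.0), "out of stock")
```

In bottom-up testing, the roles flip: the lower-level modules are real, and a small test driver calls them in place of the higher-level code that doesn’t exist yet.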

As with everything else, knowing integration testing best practices is essential to get the most out of your testing effort.

Don’t test every scenario with integration tests

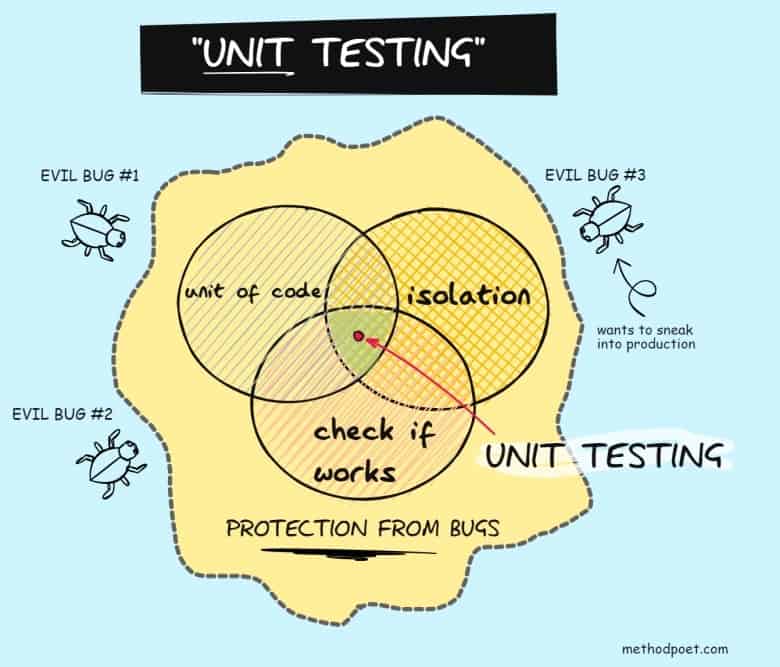

Every enterprise application has many business rules, validations, and edge cases that you may run into. You need to be aware of all of them and cover those cases with unit tests.

Why use unit tests and not integration tests? Well, it’s because unit tests are faster, and they should cover more business logic in the code than integration tests. Since every unit test has a specific scope, finding and fixing the problem is easier when a unit test fails.

When you use the right combination of unit and integration tests, it is easier to identify and fix errors in the code during development, leading to a more robust and reliable application.

Integration tests are slower because of the database access, file system access, or third-party API calls they may involve. So make sure each integration test adds value by covering a unique and important aspect of your system.
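Here is a sketch of what pushing edge cases into unit tests can look like. `validate_username` and its rules (3 to 20 characters, lowercase letters, digits, underscores) are hypothetical; the point is that every edge case runs as a fast, in-memory unit test, with `subTest` reporting each failing case separately.

```python
import re
import unittest

# Hypothetical business rule: 3-20 characters, lowercase letters, digits, underscore.
USERNAME_RE = re.compile(r"[a-z0-9_]{3,20}")


def validate_username(name: str) -> bool:
    return USERNAME_RE.fullmatch(name) is not None


class ValidateUsernameUnitTest(unittest.TestCase):
    # Edge cases belong here: pure function, no I/O, each case is near-instant.
    CASES = [
        ("abc", True),
        ("a" * 20, True),
        ("user_01", True),
        ("ab", False),      # too short
        ("a" * 21, False),  # too long
        ("Abc", False),     # uppercase
        ("ab c", False),    # whitespace
        ("", False),        # empty
    ]

    def test_edge_cases(self) -> None:
        for name, expected in self.CASES:
            with self.subTest(name=name):
                self.assertEqual(validate_username(name), expected)
```

An integration test for the registration flow would then only need to confirm that a valid username is persisted and an invalid one is rejected, instead of repeating every row of the table.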

Separate the unit test suite from the integration test suite

As I mentioned above, unit tests are fast, and integration tests are slow.

Therefore, you should separate unit and integration test suites. If you combine them in a single test suite or a single test project, you will very likely hurt your productivity.

Imagine this:

- You are working on a new feature.

- You make some changes and run the tests.

- If unit and integration tests are combined, you will have to wait much longer than if you ran only the unit tests. While waiting, you might open a web browser, check the news, or scroll through your social feed. The next thing you know, half an hour has passed, and you haven’t made much progress because your focus wandered off your primary task.

The solution is to use separate test projects. That way, you can move quickly during development: make a change, run the unit tests, and get almost instant feedback. Once you finish development, run the integration tests before pushing the code for review.
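In a Python codebase, the closest equivalent to separate projects is separate directories such as `tests/unit` and `tests/integration`, each run with `python -m unittest discover -s <dir>`. When the tests have to live side by side, one option is an opt-in switch. The sketch below assumes a hypothetical `RUN_INTEGRATION_TESTS` environment variable and a hypothetical `integration` class decorator built on `unittest.skipUnless`; `add_tax` and the `orders` table are placeholders.

```python
import os
import sqlite3
import unittest

# Hypothetical switch: integration tests run only when this variable is "1".
RUN_INTEGRATION = os.environ.get("RUN_INTEGRATION_TESTS") == "1"


def integration(cls):
    """Mark a TestCase class as an integration test; skipped unless enabled."""
    return unittest.skipUnless(RUN_INTEGRATION, "set RUN_INTEGRATION_TESTS=1")(cls)


def add_tax(net: float, rate: float = 0.2) -> float:
    """Hypothetical pure function: cheap to unit test."""
    return round(net * (1 + rate), 2)


class AddTaxUnitTest(unittest.TestCase):
    def test_adds_default_rate(self) -> None:
        self.assertEqual(add_tax(100.0), 120.0)


@integration
class OrderRepositoryIntegrationTest(unittest.TestCase):
    def test_saves_order(self) -> None:
        # Placeholder for slow I/O; in-memory SQLite keeps the sketch runnable.
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
        conn.execute("INSERT INTO orders (total) VALUES (?)", (120.0,))
        self.assertEqual(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0], 1)
        conn.close()
```

A plain `python -m unittest` run gives you fast feedback and reports the integration class as skipped, while `RUN_INTEGRATION_TESTS=1 python -m unittest` runs everything, for example in your CI pipeline. If you use pytest, custom markers combined with `pytest -m "not integration"` achieve the same split.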

Use a proper naming convention

A proper naming convention is vital in unit testing, and the same goes for integration tests. A good test method starts with a good name, so choose a naming convention that makes sense before you start writing integration tests.

There are many different conventions out there. The one I use and recommend is Feature_ExpectedBehavior_ScenarioUnderTest. Here is what each part means:

- Feature – represents one module or multiple units combined. We want to check that the module works correctly at the integration level.

- ExpectedBehavior – represents the expected output once we put the feature through the testing scenario.

- ScenarioUnderTest – represents the scenario or circumstances under which the test occurs.

An example of this naming convention:

- EmailService_sends_email_when_new_user_registers

In the above example:

- EmailService represents a feature

- sends_email is an expected behavior

- when_new_user_registers is the scenario under test

As you can see, if this test fails, the test name alone tells you which feature doesn’t work and under which scenario.
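Here is how the example name could look in a Python `unittest` test. By default, `unittest` only collects methods whose names start with `test`, so the convention gets a `test_` prefix. `RegistrationService`, `EmailService`, and `FakeSmtpOutbox` are hypothetical; in a real integration test, the outbox would typically be a local test mail server rather than an in-memory list.

```python
import unittest


class FakeSmtpOutbox:
    """Hypothetical in-memory stand-in for a mail server."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []

    def send(self, to: str, subject: str) -> None:
        self.sent.append((to, subject))


class EmailService:
    def __init__(self, outbox: FakeSmtpOutbox) -> None:
        self.outbox = outbox

    def send_welcome(self, email: str) -> None:
        self.outbox.send(email, "Welcome!")


class RegistrationService:
    def __init__(self, email_service: EmailService) -> None:
        self.email_service = email_service
        self.users: set[str] = set()

    def register(self, email: str) -> None:
        self.users.add(email)
        self.email_service.send_welcome(email)


class EmailServiceIntegrationTest(unittest.TestCase):
    # Feature_ExpectedBehavior_ScenarioUnderTest, prefixed with "test_"
    # so that unittest discovers the method.
    def test_EmailService_sends_email_when_new_user_registers(self) -> None:
        outbox = FakeSmtpOutbox()
        registration = RegistrationService(EmailService(outbox))

        registration.register("ada@example.com")

        self.assertEqual(outbox.sent, [("ada@example.com", "Welcome!")])
```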

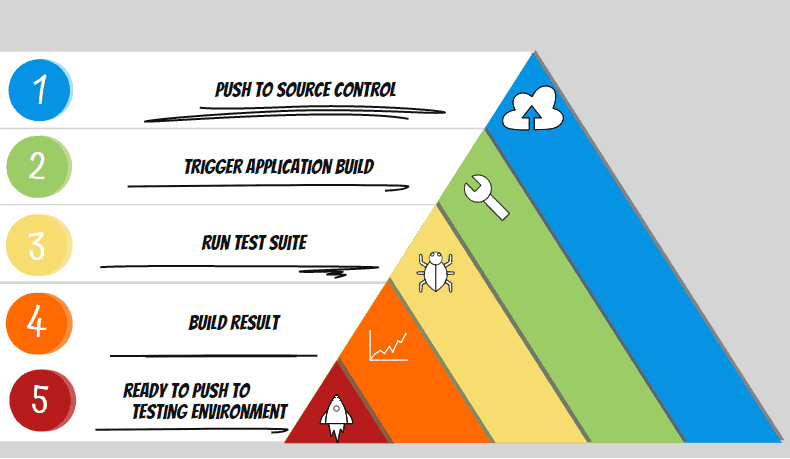

Run integration tests as part of the CI/CD process

One of the best practices for integration testing is to run the tests as part of the CI/CD process.

You don’t want to spend time waiting for tests to finish while you are developing a feature. When the tests run during the continuous integration process, they execute in a dedicated environment, so you don’t have to wait for them to finish.

This way, you will know when the latest software release has issues that must be addressed. One of the benefits of having a CI environment is improved code quality.

Automated testing also means you need to spend less time on manual testing.

Make sure you run your test automation in an environment that replicates production as closely as possible. If you run the tests in an environment that differs from your production servers, nasty bugs may slip through the testing phase into production.

Make sure to reset test data between executions

Since integration test cases depend on the outside environment, resetting test data before executing each test case is crucial. It also helps with debugging, since it’s easier to figure out where a bug came from when you know the state was clean at the start of every test.

You can then go back to the affected module for more detailed testing if needed.

Resetting the test data helps prevent so-called flaky tests: tests that fail intermittently, usually not because of a bug in the production code. If you have flaky integration tests, they likely depend on state left behind by other integration tests.

So, what integration testing tools can you use to ensure clean databases?

For example, you can use:

- Respawn – a small tool that resets database tables between test executions.

- Testcontainers – a library that supports throwaway Docker instances of all major database providers.
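Respawn and Testcontainers each have their own API, which isn’t shown here. The underlying idea of a Respawn-style reset, though, can be sketched with Python’s `sqlite3` module: `reset_database` (a hypothetical helper) deletes every row from every user table while keeping the schema, and `setUp` calls it before each test. Without the reset, whichever test runs second would see two rows in the shared `orders` table and fail, which is exactly the kind of order-dependent failure that shows up as a flaky test.

```python
import sqlite3
import unittest


def reset_database(conn: sqlite3.Connection) -> None:
    """Delete all rows from every user table, keeping the schema."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
        )
    ]
    # SQLite does not enforce foreign keys unless enabled, so order does not
    # matter here; with enforced foreign keys, delete child tables first.
    for table in tables:
        conn.execute(f'DELETE FROM "{table}"')
    conn.commit()


class OrderTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls) -> None:
        # One shared database for the whole class, like a real test database.
        cls.conn = sqlite3.connect(":memory:")
        cls.conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")

    @classmethod
    def tearDownClass(cls) -> None:
        cls.conn.close()

    def setUp(self) -> None:
        # Reset before every test so no test sees rows left by another.
        reset_database(self.conn)

    def count_orders(self) -> int:
        return self.conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

    def test_first_order_is_the_only_row(self) -> None:
        self.conn.execute("INSERT INTO orders (total) VALUES (10.0)")
        self.assertEqual(self.count_orders(), 1)

    def test_second_test_also_starts_empty(self) -> None:
        self.conn.execute("INSERT INTO orders (total) VALUES (20.0)")
        self.assertEqual(self.count_orders(), 1)
```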

Conclusion

Unit testing is important, but it’s not the only way to test and ensure the quality of your code. When developing new features or changing existing ones, take some time to write integration tests.

Integration tests should run as part of the CI/CD process, so you don’t have to wait for them while developing, in an environment that replicates production closely enough that it doesn’t introduce its own set of bugs. In addition, resetting test data before each test executes helps prevent flaky tests caused by dependencies on state left behind by other tests.

Following these integration testing best practices will help you identify integration issues as quickly as possible.

FAQ

What should integration tests cover?

Integration tests cover the integration between the various modules of your application. Therefore, for integration tests to be effective, they should cover as many integration points as possible.

Should integration tests be independent?

Yes. Each integration test should be able to run on its own, without depending on other integration tests or on the order in which they run. This makes them easier to debug and maintain.

Who is responsible for integration testing?

It depends on the team, but developers are usually responsible for writing and maintaining integration tests.

Is end-to-end testing the same as integration testing?

No. End-to-end testing, also known as e2e testing, means that you test your system as a whole. E2E tests check the software from the end user’s perspective and represent the top of the testing pyramid.